- Needle Newsletter

First LLM of 2024 🤖

Your Weekly Pinch of AI Insights

When OpenAI launched ChatGPT, it started a battle among the tech giants, and everyone wanted to get in and launch their own Large Language Model (LLM). A few months later, the focus shifted to smaller LLMs. From a reported 1.7 trillion parameters down to 7 billion, we saw it all in 2023, and as we step into 2024, models are getting smaller yet more efficient. Maybe this has something to do with the ongoing chip war: rather than waiting out the shortage, techies are trying to save on compute. On a podcast hosted by Bill Gates, Sam Altman talked about how the cost of running GPT-3 has come down by ~40x. A great point that Sam might raise again before he asks for more money 💰

Today we have for you:

First LLM of 2024: Stable Code 3B

Microsoft overtakes Apple

Access 100+ LLMs in One Fast API

JPMorgan’s DocGraphLM excels in IE

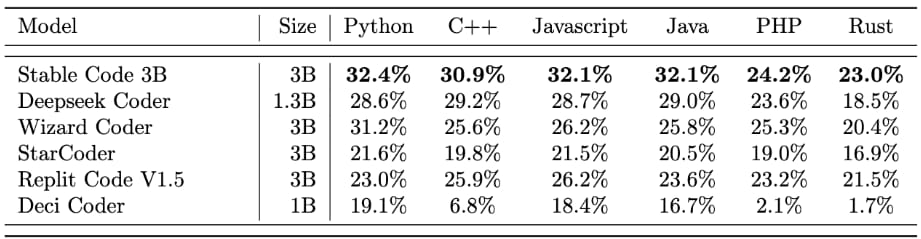

Just when I was about to upgrade my 7-year-old MacBook Air, along comes Stability AI's Stable Code 3B, a 3-billion-parameter model that can run on common laptops without a GPU. The model is designed mainly for code completion and already performs better than models like DeciCoder-1B by Deci and WizardCoder-3B by the WizardLM team. Stable Code builds on StableLM-3B-4e1t, which is pre-trained on natural language data, and is then fine-tuned on multiple code-related datasets. Below is the chart that shows model performance across programming languages.

The dynamics of the tech industry have changed many times, but this might be the most interesting news of 2024 so far: Microsoft overtook Apple to become the most valuable publicly traded company. Analysts attribute Microsoft's success to strong execution, demonstrated earnings growth, and a clear roadmap for artificial intelligence. The company's commitment to sustainability and ethical practices has also played a role in shaping its success story. Looking ahead, the evolving dynamics of the tech industry are likely to be influenced by factors such as quantum computing and the continued expansion of the Internet of Things (IoT).

AI developers often struggle with handling different API signatures and managing load across multiple models, which can lead to bottlenecks and errors. Some models have unique API signatures, complicating the creation of a standardized approach and making load balancing across various providers a manual, laborious task. 'Gateway' offers a solution as an open-source, lightweight tool that simplifies working with multiple models through a fast API. It presents a universal API that seamlessly interacts with diverse models, automates load distribution, and avoids bottlenecks. Gateway ensures smooth workflows and has shown effectiveness in managing extensive loads. Now, creating an AI app is as easy as pie, minus the baking time!
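To make the "universal API plus automatic load distribution" idea concrete, here is a toy sketch in Python. It is not Gateway's actual code or API. The two providers, their call signatures, and the `ToyGateway` class are all made up for illustration, and round-robin is just one simple way to spread load.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, List


# Two hypothetical providers with deliberately different call signatures.
def provider_a(prompt: str, max_tokens: int) -> dict:
    return {"text": f"A:{prompt[:max_tokens]}"}


def provider_b(messages: List[dict], limit: int) -> dict:
    last = messages[-1]["content"]
    return {"choices": [{"content": f"B:{last[:limit]}"}]}


@dataclass
class Target:
    name: str
    # Adapter that hides the provider's own signature: (prompt, max_tokens) -> text
    call: Callable[[str, int], str]


class ToyGateway:
    """Exposes one `complete` method and rotates requests across targets."""

    def __init__(self, targets: List[Target]) -> None:
        if not targets:
            raise ValueError("at least one target is required")
        self._rotation = cycle(targets)          # simple round-robin
        self.counts = {t.name: 0 for t in targets}

    def complete(self, prompt: str, max_tokens: int = 64) -> str:
        target = next(self._rotation)
        self.counts[target.name] += 1
        return target.call(prompt, max_tokens)


def build_gateway() -> ToyGateway:
    """Wire both hypothetical providers behind the same interface."""
    return ToyGateway([
        Target("a", lambda p, n: provider_a(p, n)["text"]),
        Target("b", lambda p, n: provider_b(
            [{"role": "user", "content": p}], n)["choices"][0]["content"]),
    ])
```

Calling `build_gateway().complete("hello")` repeatedly alternates between the two providers while the caller only ever sees one signature. A real gateway would also need retries, timeouts, and weighting, which this sketch leaves out.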

Processing and understanding diverse document formats like business forms, receipts, and invoices is becoming increasingly important. Used on their own, existing methods such as Large Language Models (LLMs) and Graph Neural Networks (GNNs) struggle to grasp the layout and relationships in documents, like how table cells connect to headers or how text flows across line breaks. To solve this, researchers at JPMorgan have introduced a framework called ‘DocGraphLM.’ It combines the power of LLMs to understand the content with GNNs to understand the layout and structure, and it predicts how elements in a document relate to each other in terms of direction and distance. In effect, the AI views documents as maps, grasping both text and structure, and excels at extracting information and answering questions.
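For a feel of what "direction and distance" between document elements can mean, here is a small geometric sketch in Python. It is not DocGraphLM's implementation: the `Box` type, the eight direction labels, and the use of box centers are assumptions made for illustration. It only shows the kind of pairwise relation a layout-aware model could be asked to predict.

```python
import math
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Bounding box of a text segment in page coordinates (y grows downward)."""
    x0: float
    y0: float
    x1: float
    y1: float

    @property
    def center(self) -> Tuple[float, float]:
        return ((self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2)


# Eight 45-degree sectors, starting at "right" and turning toward "down".
DIRECTIONS = ["right", "down-right", "down", "down-left",
              "left", "up-left", "up", "up-right"]


def relation(src: Box, dst: Box) -> Tuple[str, float]:
    """Return (direction, distance) from src to dst, measured between centers."""
    (sx, sy), (dx, dy) = src.center, dst.center
    distance = math.hypot(dx - sx, dy - sy)
    angle = math.degrees(math.atan2(dy - sy, dx - sx)) % 360
    # Shift by half a sector so each label is centered on its axis.
    sector = int(((angle + 22.5) % 360) // 45)
    return DIRECTIONS[sector], distance


header = Box(0, 0, 100, 20)
cell_below = Box(0, 40, 100, 60)
cell_right = Box(200, 0, 300, 20)
```

With these boxes, `relation(header, cell_below)` gives `("down", 40.0)` and `relation(header, cell_right)` gives `("right", 200.0)`, which is roughly how a table header relates to the cells under and beside it.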